Why Octane’s Analysis Varies, and Why That’s Good Security

Octane’s results vary between runs because it uses generative models alongside rule-based detectors, so each pass can focus its attention on a slightly different slice of your code’s potential behaviors. That variability is a feature, not a bug. It gives you multiple complementary passes through your codebase's attack surface, finding issues that static, rule-based checkers often miss in practice.

Different Paths, Deeper Coverage

If you’ve used traditional static analyzers in the past, you’re probably used to one thing more than any other: repeatability. Run the tool on the same code and you’ll always get the same report.

So when a tool like Octane surfaces slightly different findings across different runs on similar code, it’s natural to wonder what changed.

Octane’s results can vary because it uses generative large language models in addition to traditional rule-based detectors. In practice, each run can only take in a subset of the codebase’s potential behaviors, so the model has to choose where to allocate its finite attention.

That means each pass through the code ends up sampling a slightly different slice of the vulnerability space. Over many runs, the combination of these different slices can surface issues that weren’t seen in earlier passes.
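To make the "over many runs" intuition concrete, here is a minimal sketch under a deliberately simple assumption: each run independently surfaces a given issue with some fixed probability `p`. Both the independence assumption and the value `0.3` are hypothetical, chosen for illustration only; they are not measurements of Octane.

```python
def cumulative_detection(p: float, runs: int) -> float:
    """Chance that at least one of `runs` independent passes surfaces an issue
    that a single pass surfaces with probability `p` (illustrative model only)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if runs < 0:
        raise ValueError("runs must be non-negative")
    # The issue is missed only if every single run misses it.
    return 1.0 - (1.0 - p) ** runs


if __name__ == "__main__":
    # p = 0.3 is a made-up per-run detection chance for a hard-to-find issue.
    for n in (1, 3, 5, 10):
        print(f"{n:>2} runs -> {cumulative_detection(0.3, n):.3f}")
```

Under this toy model, an issue that a single pass would probably miss becomes very likely to appear somewhere across a handful of passes, which is the effect described above.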

Non-identical results between runs are not a failure of the system; they’re the result of using a generative engine that explores your code in non-identical ways over time.

Multiple Octane runs give you multiple, complementary passes at your attack surface. Every new PR is an opportunity for the engine to approach your codebase from a new angle and identify issues that it may not have picked up earlier.

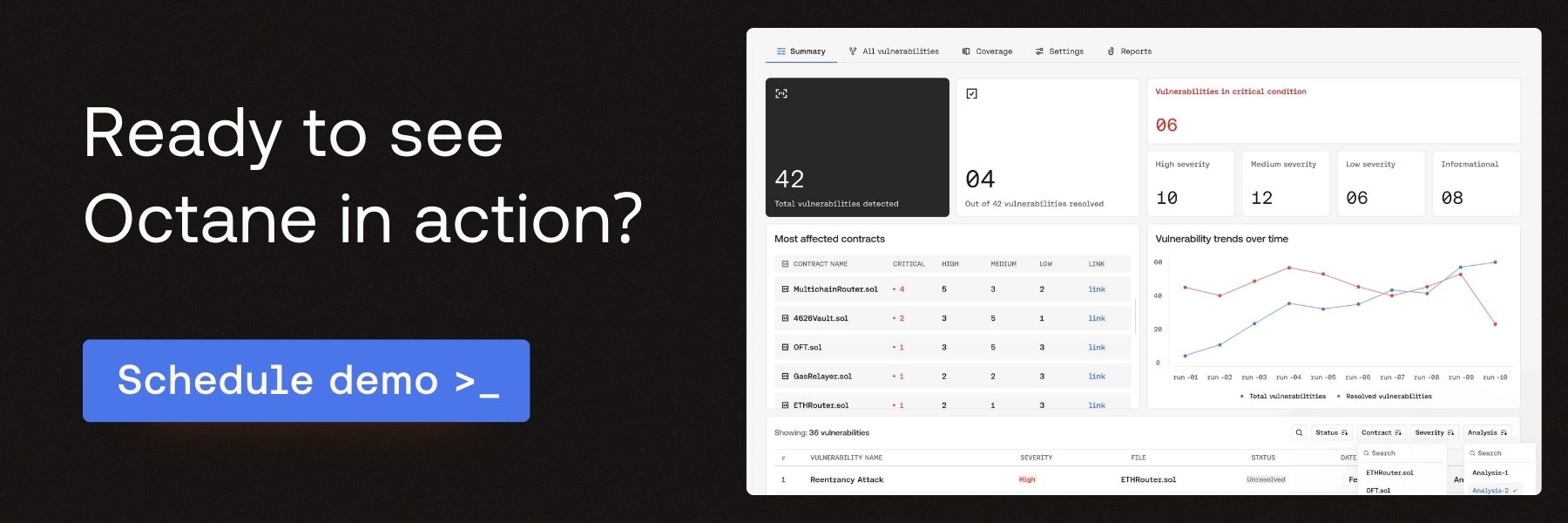

Teams that deliberately re-run Octane or run it automatically in CI see this in practice: additional, distinct findings show up over time. An Octane scan is another set of eyes on the code, and in multiple cases it has found critical bugs that audits and rule-based tools have missed.

Continuous Results

You may be wondering: if results can differ, how do I trust what’s been found? The answer is integration and iteration.

Octane is designed to run in CI/CD pipelines on every pull request. With each new code update or scheduled run, Octane incrementally expands its coverage, catching new edge-case issues as they arise (or as it explores new angles).

Importantly, Octane’s offensive engine is designed so that true positives aren’t lost to randomness. It will catch the same critical bug multiple times in one analysis, from different paths and detectors, then deduplicate those hits into a single stable alert. As more probabilistic runs are attempted, edge-case vulnerabilities from the long tail of the distribution get surfaced and pulled into the findings report. This is very similar to how auditors work: some of the best vulnerabilities are found in the last days of an audit!
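The deduplication idea can be sketched in a few lines. Octane’s actual implementation is not described here, so everything in this example is an assumption: the `Finding` fields, the choice of fingerprint, and the sample data are all hypothetical. It only shows the general technique of collapsing repeated hits on the same bug into one alert.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """One raw hit from one detector (hypothetical shape, for illustration)."""
    file: str
    function: str
    category: str
    detector: str


def deduplicate(findings):
    """Group raw hits by a location-and-category fingerprint.

    Returns a dict mapping each fingerprint to the sorted list of detectors
    that reported it, so one bug yields one alert however many times it was hit.
    """
    alerts = {}
    for f in findings:
        fingerprint = (f.file, f.function, f.category)  # assumed identity of a bug
        alerts.setdefault(fingerprint, set()).add(f.detector)
    return {key: sorted(detectors) for key, detectors in alerts.items()}


if __name__ == "__main__":
    raw = [
        Finding("Vault", "withdraw", "reentrancy", "rule-based"),
        Finding("Vault", "withdraw", "reentrancy", "generative"),
        Finding("Token", "mint", "access-control", "generative"),
    ]
    for fingerprint, detectors in deduplicate(raw).items():
        print(fingerprint, "reported by", detectors)
```

A real fingerprint would likely need to be more robust than file, function, and category (for example, tolerant of renames or line shifts), but the principle is the same: the alert stays stable while the paths that reach it vary.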

In other words, Octane is engineered for repeatable accuracy even as it explores creatively. You get the best of both worlds: obvious bugs are squashed, and an ever-expanding net catches bugs a one-shot scanner would miss. This is why some of our most successful users, like Alan at Covenant, come out of contests and competitions bug-free: they use Octane across as many PRs as possible and review every single one.

Probable Cause

The top security teams have already shifted their mindset and are leveraging the tools available in this new paradigm. They understand that determinism is comfortable, but generative exploration finds the truly novel vulnerabilities that matter most when they get exploited. One might even argue these are the types of bugs that end up as solo Highs or solo Mediums in audit competitions.

Deterministic scanners give you the same output every run, which makes them feel reliable, but really they’re just reliably inaccurate: false positives all over the place, and crits, highs, mediums, and even lows consistently missed. They’ll never surprise you with a new finding on their own, because they’ll never catch anything outside their rulebook.

Octane, on the other hand, will surprise you – and that’s exactly what you want from a security tool. The slight variability between scans is the result of the security engine thinking of a new question to ask your code. Attackers thrive on the unexpected, and your security tooling should too.