Agentic artificial intelligence has turned the field of cybersecurity into an asymmetric war where autonomous attackers now outpace human defenders. The only effective solution is to fight back with the same tools.

Asymmetric War: Agentic AI and the Future of Cybersecurity

Since the launch of GitHub Copilot in June 2021, the software industry has operated under a comforting, albeit now fragile, assumption: that AI can serve as a first officer who might help navigate a route, but a human captain must always be the one actually flying the plane.

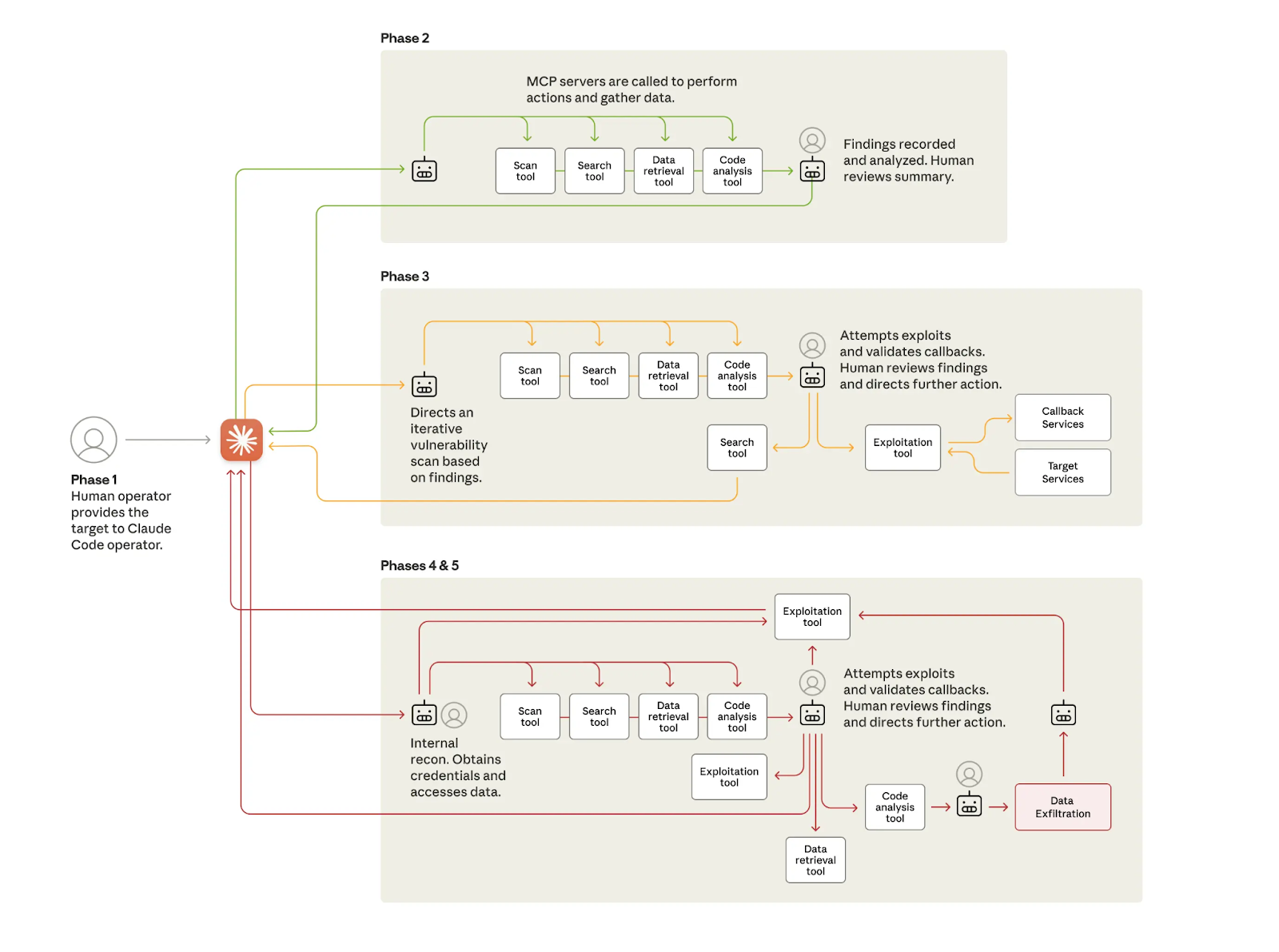

That assumption was shot down in November 2025 by the release of a report from Anthropic’s threat intelligence team. They announced their detection of a sophisticated cyber-espionage campaign that completely upends previous threat models.

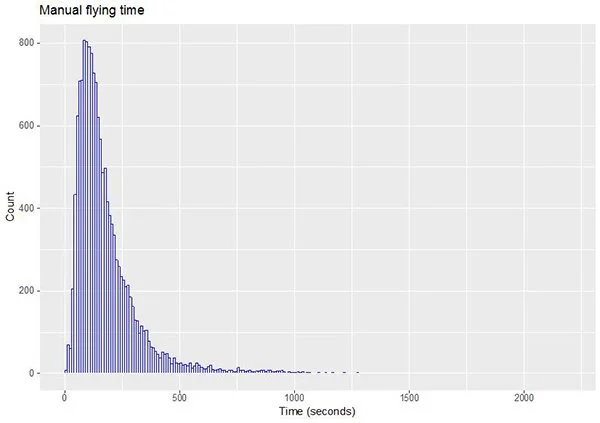

This was the first well-documented, large-scale instance of an AI executing a cyberattack with near-total autonomy. The attacker, assessed with high confidence to be a state-sponsored group, deployed an AI agent and gave it a directive, which the agent then executed across the entire operational loop – reconnaissance, weaponization, exploitation, and exfiltration – with only minimal human oversight.

The agent itself executed 80-90% of the tactical work.

At its peak, it “sustained request rates of multiple operations per second”, operating at a tempo physically impossible for human hackers (or even dedicated incident response teams) to match.

If you are building any sort of software, this should make you think twice. If you’re building onchain applications, it should make you rethink your entire threat model.

Enterprise software is (usually) full of firewalls, network segmentation, identity controls, and compliance regimes that raise the bar for hackers trying to access sensitive data. And they still get wrecked.

But blockchain applications are an even better hunting ground for malicious agents. Smart contracts contain tokens rather than social security numbers. You can see exactly how much value any contract holds (often up to hundreds of millions of dollars), and you can read through verified source code on any block explorer. Onchain exploits convert to immediate, liquid loss, often with fewer chokepoints and faster time to impact. There's no need to auction data off on shady marketplaces when you can dump stolen tokens immediately on a DEX to realize your profit.

The era of the human auditor vs. the human hacker is over. The war is now asymmetric. Offense is getting cheaper. Compute scales, but human attention does not. Unless defense becomes automated and continuous, the gap will widen.

Infinite Interns

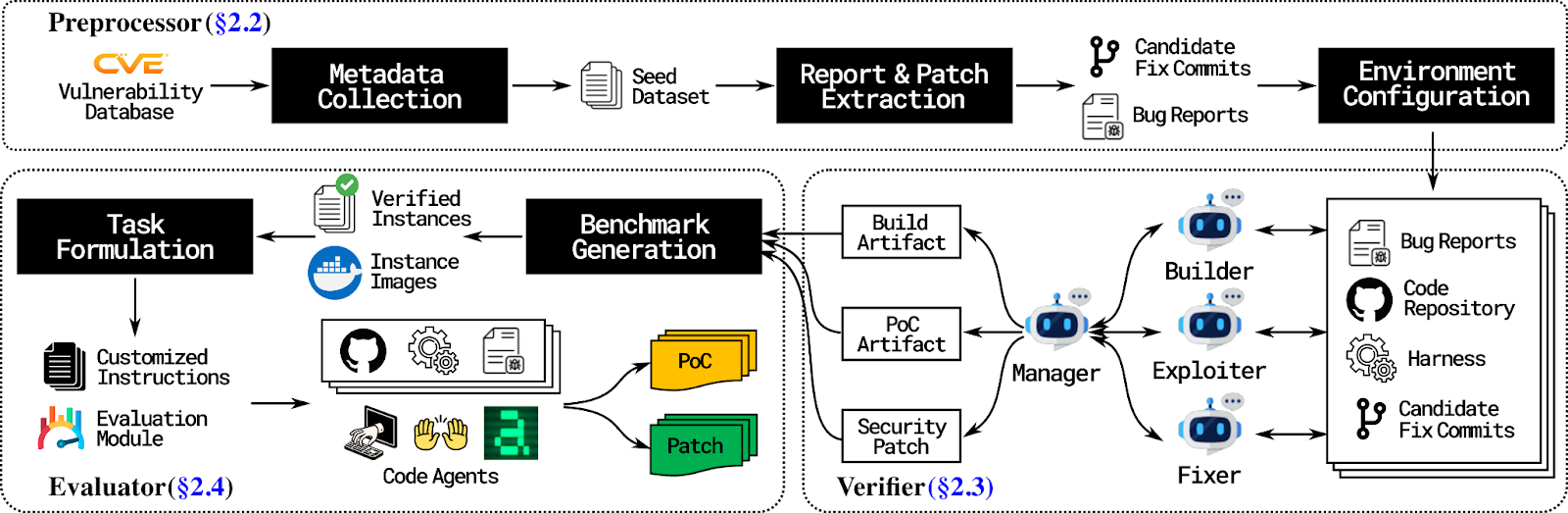

Following the November blog post, Anthropic and MATS (ML Alignment & Theory Scholars) announced the release of SCONE-bench (Smart CONtracts Exploitation benchmark) in December.

The dataset consists of 405 contracts taken from the DeFiHackLabs repo, a catalog of historical smart contract exploits. MATS and Anthropic tested the abilities of 10 general-purpose LLMs, including DeepSeek V3, Claude Opus 4.5, Claude Sonnet 4.5, and GPT-5, on these insecure contracts.

The models produced ready-to-deploy exploits for 207 (51.11%) of the 405 confirmed vulnerabilities, reproducing $550 million in simulated stolen funds.
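As a quick sanity check on those headline figures, the arithmetic can be reproduced in a few lines. This is only a sketch: the constants are the numbers quoted above, the function names are made up for illustration, and the per-exploit mean is a rough average that says nothing about how the simulated losses are actually distributed across contracts.

```python
# Figures quoted from the SCONE-bench results in the paragraph above.
TOTAL_CONTRACTS = 405
EXPLOITED_CONTRACTS = 207
SIMULATED_LOSS_USD = 550_000_000


def success_rate(exploited: int, total: int) -> float:
    """Share of benchmark contracts with a working exploit, in percent."""
    return 100.0 * exploited / total


def mean_loss_per_exploit(total_loss_usd: float, exploited: int) -> float:
    """Illustrative average only; real losses are unlikely to be evenly spread."""
    return total_loss_usd / exploited


rate = success_rate(EXPLOITED_CONTRACTS, TOTAL_CONTRACTS)
average = mean_loss_per_exploit(SIMULATED_LOSS_USD, EXPLOITED_CONTRACTS)

print(f"Exploit success rate: {rate:.2f}%")
print(f"Mean simulated loss per exploit: ${average:,.0f}")
```

On these numbers, the average comes out to a few million dollars of simulated loss per successful exploit.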

MATS and Anthropic then evaluated the same 10 LLMs on contracts that were only exploited after their knowledge cutoff dates, to control for any potential training data contamination. The fact that the models could come up with exploits for 19 vulnerabilities worth $4.6 million shows that they were reasoning through novel logic on their own, not just replicating exploits they’d already learned during training.

This is the absolute lower bound of what’s on offer. Anyone with $20 for a monthly subscription to ChatGPT or Claude could have exploited these vulnerabilities over the course of 2025. The bounties available to dedicated attackers with specialized AI tools are much higher.

Now, it’s trivial for an attacker to spin up a swarm of infinite interns that poke and probe at every deployed onchain contract 24/7.

This is why we built Octane. You can’t fight malicious machine intelligence on a human scale. You need to use the same weapon to fight back.

Coding Ability ≠ Secure Coding Ability

Some vibe coders look at the benchmarks and assume that because an AI can build a feature, it can also secure it. The data prove otherwise.

On general software engineering benchmarks like SWE-bench, state-of-the-art (SotA) models have achieved impressive results, correctly solving over 60% of real-world GitHub issues. They are fantastic at construction: building good-looking frontends and React components, and writing docs and boilerplate. This has led to a massive increase in code velocity.

However, without careful mitigation, this increased velocity can bring with it an invisibly compounding security debt.

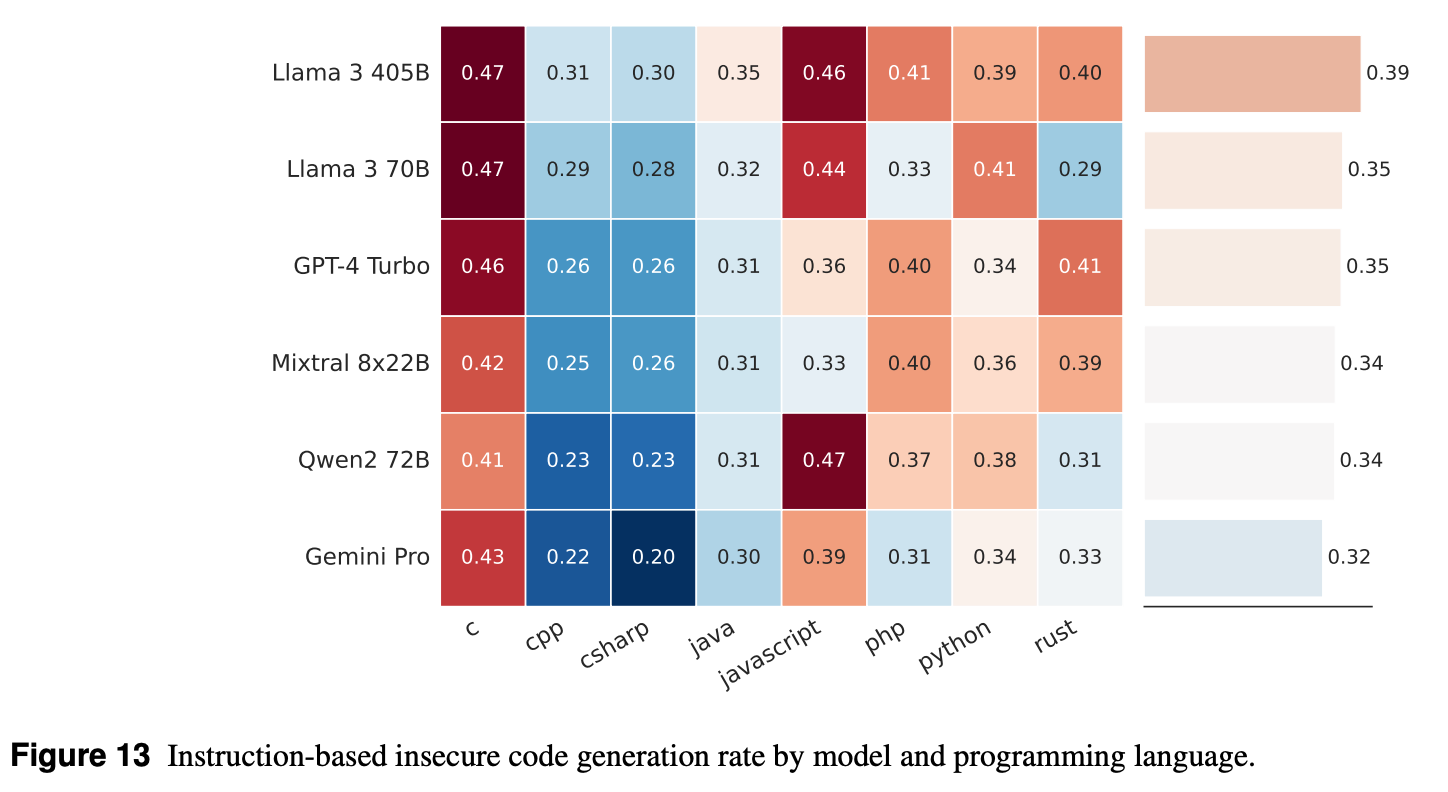

Meta's 2024 CYBERSECEVAL 3 found that widely used LLMs suggest vulnerable code more than 30% of the time. Even worse, larger models with advanced coding capabilities, such as Llama 3, can be more prone to suggesting insecure patterns because they are trained on the vast, insecure expanse of open-source code.

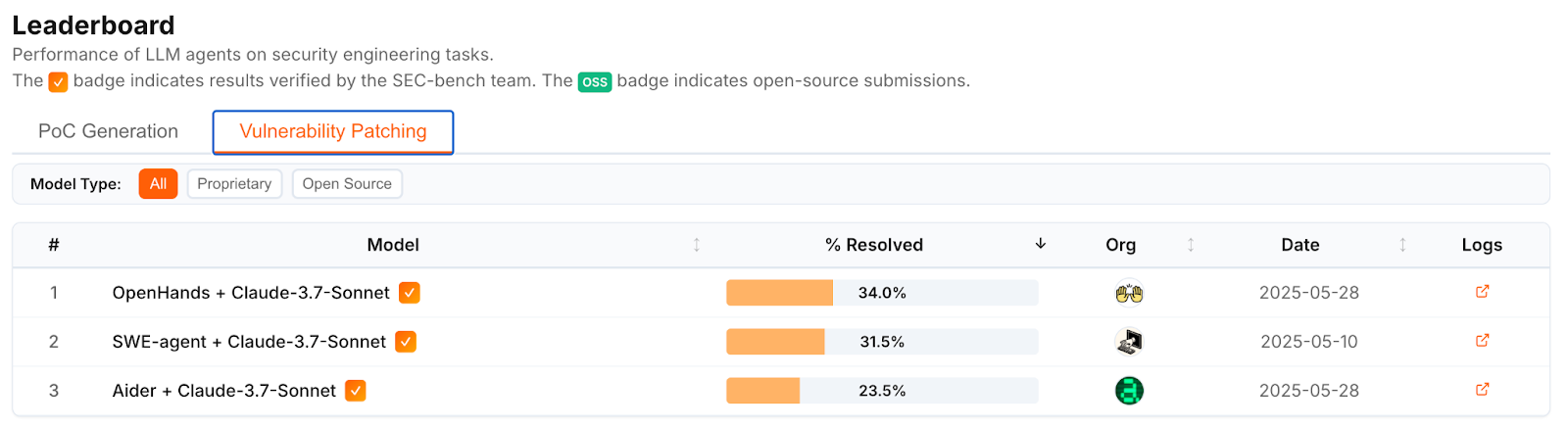

Looking at SEC-bench, a benchmark specifically designed for evaluating LLM performance on security-focused tasks, the best models achieve only a 34.0% success rate when it comes to patching vulnerabilities securely and effectively.

SotA models are even worse at generating proofs of concept that trigger valid sanitizer errors, with the best (OpenHands + Claude-3.7-Sonnet) achieving at most 18.0% success.

This creates a paradox: generative AI is allowing developers to ship code faster than ever before (high velocity), but that code is statistically very likely to contain critical flaws (low integrity). You are building a skyscraper at record speed, but 30% of the steel beams are actually made of balsa wood. Relying on the same AI that wrote the bugs to find the bugs is a circular failure mode.
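To see why a 30% per-suggestion rate compounds so quickly, here is a back-of-the-envelope sketch. It assumes each accepted suggestion is vulnerable independently with the same probability, which is a simplification and not something the benchmark itself claims. The function name is hypothetical, and "vulnerable" here does not necessarily mean "critical".

```python
def prob_at_least_one_vulnerable(p_vulnerable: float, n_suggestions: int) -> float:
    """Chance that at least one of n accepted suggestions is vulnerable.

    Assumes suggestions are independent with identical probability,
    a simplifying assumption made purely for illustration.
    """
    if not 0.0 <= p_vulnerable <= 1.0:
        raise ValueError("p_vulnerable must be between 0 and 1")
    if n_suggestions < 0:
        raise ValueError("n_suggestions must be non-negative")
    return 1.0 - (1.0 - p_vulnerable) ** n_suggestions


# 0.30 is the per-suggestion rate quoted above from CYBERSECEVAL 3.
P_VULNERABLE = 0.30

for n in (1, 5, 10, 20):
    risk = prob_at_least_one_vulnerable(P_VULNERABLE, n)
    print(f"{n:>2} accepted suggestions -> {risk:.1%} chance of at least one vulnerable")
```

Under that assumption, the odds of shipping at least one vulnerable suggestion pass 80% after five accepted suggestions and 97% after ten.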

Security in the Balance

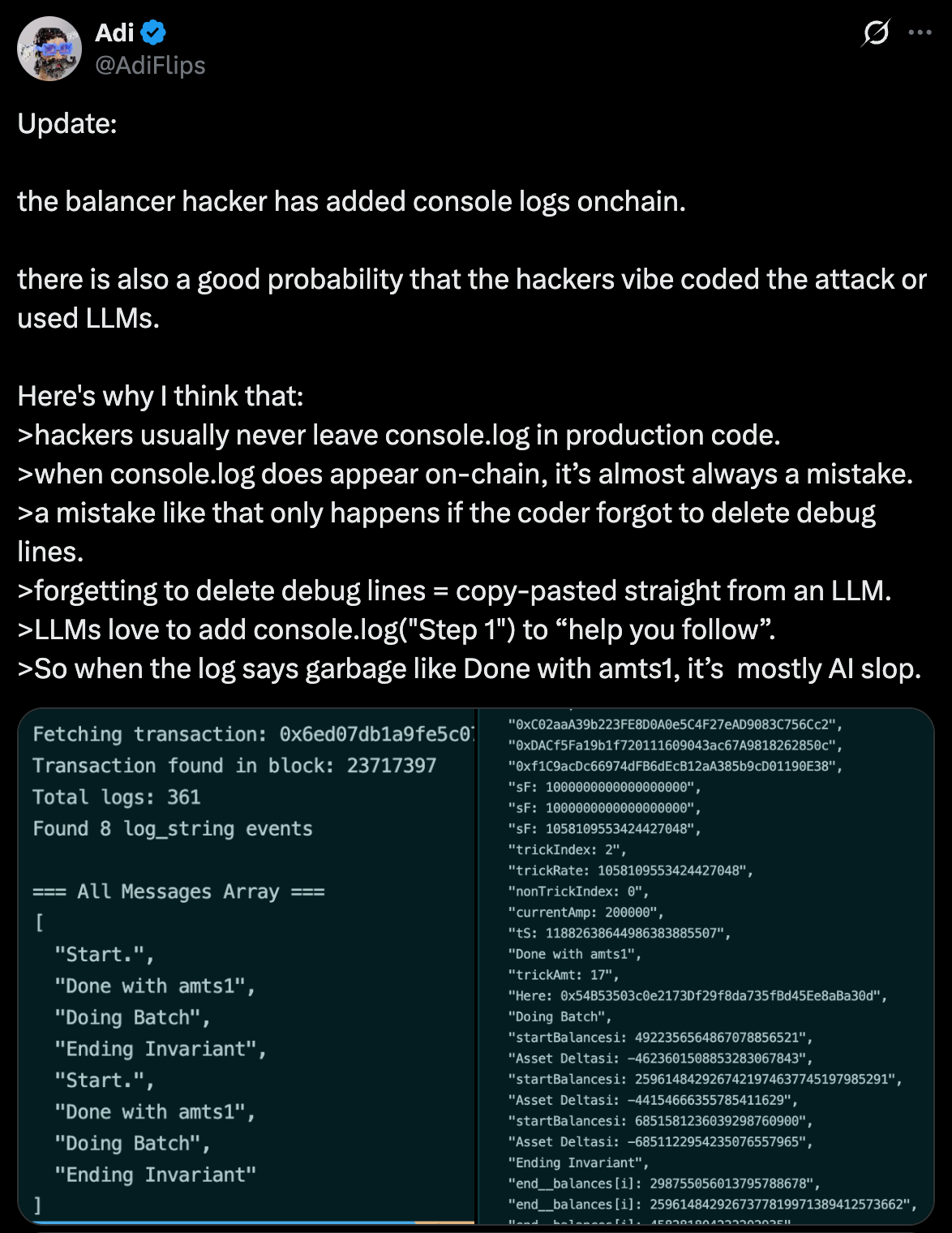

In their SCONE-bench report, Anthropic explicitly mentions the $128 million November 2025 Balancer hack as a "concrete example" of the type of exploit their AI agents are capable of executing. While they stop short of definitively stating "a similar AI model did this," their choice to headline this specific incident – which coincided with the timeline of their internal testing – signals a significant degree of confidence that this was an AI-plausible, if not AI-executed, attack.

While it is not possible to prove the degree of AI involvement (if any), there is relatively strong circumstantial evidence. The inclusion of `console.log` in the final deployment suggests that the attacker likely used an LLM to generate or refine the mathematical exploit logic.

Balancer is one of the best-established DeFi protocols with 11 manual audits by four separate auditing firms since 2021. The fact that its contracts were still vulnerable to such a large-scale exploit after years of human scrutiny must serve as a serious wake-up call.

The Only Way Out Is Through

The lesson of 2025 is that security through obscurity is dead. You cannot hide from an agent that can simulate thousands of transactions per second and reason through your codebase better than many professional devs and security researchers.

In this asymmetric war, defenders need every edge they can get. You cannot afford to wait weeks for a manual audit slot to open up while autonomous swarms are probing your deployed contracts 24/7. You need security that moves at machine speed.

This is the thesis behind Octane. We didn't build another static analysis tool to nag you about findings that are theoretically possible but practically irrelevant. We built an autonomous red team that works around the clock directly in your CI pipeline and provides full exploitation scenarios, giving you exactly the kind of data you need to distinguish a real threat from a false positive.

Exploit breakdowns + diff-ready fixes + per-PR scan = security debt that trends down, not up.

In 2026, the era of AI being limited to a mere copilot is over. Human pilots are still highly skilled operators, but a critical part of their job is knowing when to delegate to the machine itself and when to take back control.

We are now in the era of the agent, and the best security teams from here on out will be partnerships between expert human operators and the specialized AIs working under their direction.